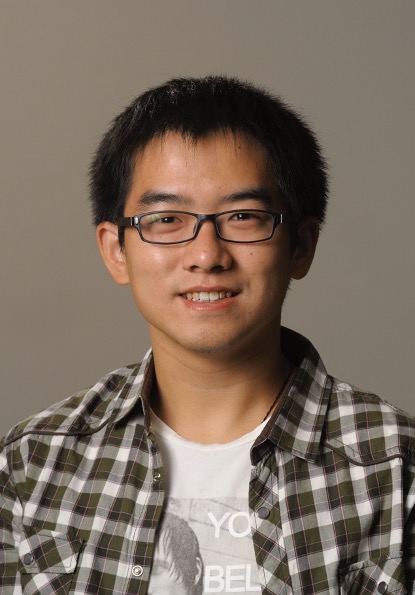

Zhangyang “Atlas” Wang (University of Texas at Austin & Amazon Research)

Professor Zhangyang “Atlas” Wang is currently the Jack Kilby/Texas Instruments Endowed Assistant Professor in the Department of Electrical and Computer Engineering at The University of Texas at Austin, where he leads the VITA group (https://vita-group.github.io/). He also holds a visiting researcher position at Amazon. He received his Ph.D. degree in ECE from UIUC in 2016, advised by Professor Thomas S. Huang, and his B.E. degree in EEIS from USTC in 2012. Prof. Wang has broad research interests spanning the theory and applications of machine learning. Most recently, he has studied automated machine learning (AutoML), learning to optimize (L2O), robust learning, efficient learning, and graph neural networks. His research is gratefully supported by NSF, DARPA, ARL, ARO, IARPA, and DOE, as well as dozens of industry and university grants. He and his students have received many research awards and scholarships, as well as media coverage.

Short Abstract: Prior work has observed that appropriate post-training compression, in particular pruning, can improve the empirical robustness of deep neural networks (NNs), potentially by removing spurious correlations while preserving predictive accuracy. In this talk, I will introduce our recent findings extending this line of research: (i) sparsity can be injected into adversarial training, in either static-mask or dynamic-sparsity form, to reduce the robust generalization gap (e.g., "robust overfitting") while also significantly reducing training and inference FLOPs; (ii) pruning can further improve certified robustness for ReLU-based NNs in general, under the complete verification setting, and can scale complete verification up to large adversarially trained models.